Statistics:

In its simplest form, statistics is defined as a numerical representation of information. According to the renowned English statistician Sir Arthur Lyon Bowley, statistics refers to numerical statements of facts in any area of inquiry.

Sir Arthur Lyon Bowley, also an economist, worked on economic statistics and pioneered the use of sampling techniques in social surveys.

Statistics is also seen as a branch of mathematics which deals with enumeration data (one type of numerical data). Statistics is used as a tool for data analysis in research; it is used to gather, organize, analyze, and interpret the information collected.

Let’s first understand what data is. Data refers to a set or a bundle of information which is collected in order to conduct research. Data is of two types: numerical and non-numerical.

Data that can be counted is termed numerical data; it deals with numbers and calculation. Non-numerical data, by contrast, deals with information which cannot be counted; rather, it is inferred or assumed.

Though both types of data (numerical as well as non-numerical) are information, the role of statistics is confined to “numerical data” only.

Numerical data is of two types: enumeration data and metric data. Enumeration data refers to information which can be counted, for example, class intervals, frequencies, etc. Metric data is based on measurement; it needs a unit to be specified in order to make sense of the data.
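To make the distinction concrete, here is a minimal Python sketch. The survey responses, the height values, and the helper name `describe_heights` are all hypothetical and chosen only for illustration: counting how often each category occurs produces enumeration data (frequencies), while the heights are metric data that only make sense once a unit is attached.

```python
from collections import Counter

# Hypothetical survey responses (illustrative values only).
# Counting how often each answer occurs yields enumeration data.
favourite_subjects = ["math", "history", "math", "art", "math", "history"]
frequencies = Counter(favourite_subjects)

# Hypothetical measurements (illustrative values only).
# Metric data needs a unit before the numbers mean anything.
heights = [152.0, 160.5, 148.2, 171.3]
UNIT = "cm"


def describe_heights(values, unit):
    """Return each measurement as a string with its unit attached."""
    return [f"{value} {unit}" for value in values]


print(dict(frequencies))
print(describe_heights(heights, UNIT))
```

The frequencies are obtained purely by counting, whereas the heights would be ambiguous without the unit.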

Branches of Statistics:

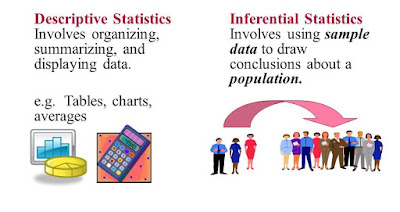

There are two major branches of statistics: “Descriptive Statistics” and “Inferential Statistics”.

Descriptive Statistics:

It describes certain characteristics of a group of data. It has to be precise (precise means brief and exact). It limits generalization to the particular group of individuals observed. Hence, no conclusions are extended beyond this group, and any similarity to those outside the group cannot be assumed. The data describe one group and that group only.

Inferential Statistics:

It is concerned with estimation or prediction based on certain evidence. It always involves the process of sampling, that is, the selection of a small group. This small group is assumed to be representative of the population from which it has been taken. The small group is known as the sample, and the large group is the population. Inferential statistics allows the researcher to draw conclusions about populations based on observations of samples.

Following are the two important goals of inferential statistics:

· The first goal is to determine what might be happening in a population based on a sample of the population.

· The second goal is to determine what might happen in the future.

Thus, inferential statistics are used to estimate and/or to predict. In order to use inferential statistics, only a sample of the population is required.
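The contrast between the two branches can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the simulated population of exam scores, the sample size of 100, and the use of a rough normal-approximation interval (sample mean plus or minus 1.96 standard errors) as the estimate. It is one simple way to estimate a population value from a sample, not the only one.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical population: simulated exam scores of 10,000 students.
population = [random.gauss(70, 10) for _ in range(10_000)]

# Draw a small group (the sample) from the large group (the population).
sample = random.sample(population, 100)

# Descriptive statistics: summarize the observed sample and nothing more.
sample_mean = statistics.mean(sample)
sample_sd = statistics.stdev(sample)

# Inferential statistics: use the sample to estimate the population mean.
# Assumption: a rough 95% interval based on the normal approximation.
standard_error = sample_sd / len(sample) ** 0.5
ci_low = sample_mean - 1.96 * standard_error
ci_high = sample_mean + 1.96 * standard_error

print(f"Sample mean (descriptive): {sample_mean:.2f}")
print(f"Estimated population mean (inferential): {ci_low:.2f} to {ci_high:.2f}")
```

The sample mean and standard deviation describe only the 100 scores that were drawn. The interval is a statement about the whole population, made without examining every member of it.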